
-

Anti-Patterns for Software Features: Pony, Unicorn, Baby

Pony

A feature that someone who is not involved in the actual implementation demands for the wrong reasons. For example, because of a desire to follow some current trend, political maneuvering, an unhealthy fascination with technology, or maybe infatuation with his/her own intelligence.

Unicorn

A feature with unrealistic requirements. Low-cost, high-performance, with perfect user experience, bug-free, delivered yesterday.

Baby

A feature that a member of the project team wants to implement – or even worse – has already implemented. The emotional attachment makes it hard to abandon the “baby”, even if it would be better for the overall project.

-

Thinking About the Costs of a Software Feature

(This article was inspired by parts of my talk “Things I wish I had learned earlier as a developer” at the Developer Week 2022 in Nuremberg, Germany)

More than just coding

The total cost of a software feature comprises more than just the time spent on writing the code. When you think about the steps from the first idea to the release of the product, various contributing factors come to mind:

- The work necessary for turning a rough sketch of an idea into a viable concept
  - What exactly does the feature do? And what does it not do?
  - What is the actual benefit for the users?
  - How are users supposed to use it? In what context?

- Time spent on planning the work
  - Who is available and has the skill set to implement the feature?
  - Does the feature influence / depend on other features? Does this force a certain order?
  - Is this a risky feature that should not be started shortly before the code freeze prior to a software release?

- Effort that goes into preparing the coding part of the development
  - Which technology / framework / library should be used?
  - “Buy vs. Build”: What are the advantages and disadvantages of each?
  - Are the licenses of all required components known and, most importantly, compatible?

- Time spent on writing the code
  - “Known knowns”: Writing the code you know you need for the planned feature.
  - “Known unknowns”: Finding the best solution by trying out several conceivable approaches.
  - “Unknown unknowns”: Dealing with unexpected issues regarding performance, reliability, usability, or user experience – sometimes you only find out when “the real thing” is running under realistic conditions. Also: running into bugs in tools / frameworks / libraries beyond your control.

- Testing the software
  - Do you write unit tests? And/or integration tests?
  - How do you test the UI?
  - What are the economics of automated vs. manual tests?

- Documentation
  - How much external and/or internal documentation do you need?
  - Can you get away with not writing documentation?
  - Who can write the documentation? In what quality?

Wait, there’s more

A feature can also create costs after the release. Some features more, others less.

- Fixing bugs
  - You need to reproduce the bug and preferably write a failing test.
  - For critical bugs, you may have to stop your current work immediately for a hotfix.
  - For a long-standing bug, the fix may break behavior that other people have come to expect or have built workarounds for.
  - Worst of all: There is always a chance > 0% that the bug is caused by something beyond your control, and that fixing it either may take a long time or may not be possible at all.

- Dealing with feedback
  - You need to determine whether you are dealing with valid concerns or the opinion of a loud minority.
  - Does the feedback lead to extending or changing the feature?

- Managing change
  - Changes need to be communicated to customers – there is a high probability that, no matter how much effort you have put into communication in the past, certain people will be surprised by the change.
  - Does the change involve some kind of migration? Manual migration may require documentation with step-by-step instructions. An automatic migration, e.g., of configuration data, may have to deal with a variety of different settings as the result of repeated upgrades or manual changes.

- Dealing with roadmap issues
  - Users of your software view existing features, including the feature you just added, as “the way things are intended”. For example, in terms of how data is processed or what the user interface looks like. If future features follow a different philosophy, this needs to be communicated. And you may have to deal with habits your users have formed because of your feature.
  - A quick decision while developing a feature (e.g., what a single click or a specific hotkey does) may be short-sighted and block future development. This either requires a workaround or managing change (see above).

- Handling end-of-life
  - Do you simply remove the feature? Or do you add a configuration switch and keep it switched off by default?
  - Are existing users still able to perform their specific workflow? If switching to a new workflow is too complicated, or maybe not even possible, customers may remember the other shortcomings of your software and switch to competing products.
  - Always remember that no matter how specific and little-known a feature is, there is a probability that somebody uses and relies on this feature. You may never hear any feedback, even after announcing the removal (who reads release notes, after all?). But once the feature is removed, chances are that somebody is upset and lets you know.

Where to go from here: The great filter

The cheapest feature is the one that is never implemented and that nobody misses. If you feel a feature is important enough to start work on it, you should first answer one simple question:

What’s the real-life scenario?

When being asked to explain the purpose of a proposed feature, are you able to go beyond “one can do X with it”? Are you able to tell a believable story with a realistic protagonist in a certain context, who encounters a problem that can be solved by using the feature? And does this story make sense when telling it to other people?

If not, that feature may be a candidate for not spending budget on it.

Want more?

If you liked this non-technical article, you may like the other articles of the “Thoughts” category, e.g.,

-

UI/UX for Devs: An Illustrated Mental Model for Empathy

In a previous blog post I wrote about how mental models help you understand how something works. These models in your mind explain what happened in the past and provide a reasonable (but not always reliable) prediction of what will happen in the future. Interestingly, mental models can do that even though they are simplified, incomplete, and often enough simply wrong.

Empathy also has been a topic on my blog; I wrote about what it is and why it is important and why it is good for you, too.

In this article, I combine the two topics and present you my personal mental model of empathy. Note that it is a mental model, not the mental model for empathy. As such, it is, well… simplified, incomplete, and probably wrong. Still, it is “good enough” for many purposes and hey, it comes with pictures!

To start things off, imagine you have developed a non-trivial application as a single developer.

You, the developer

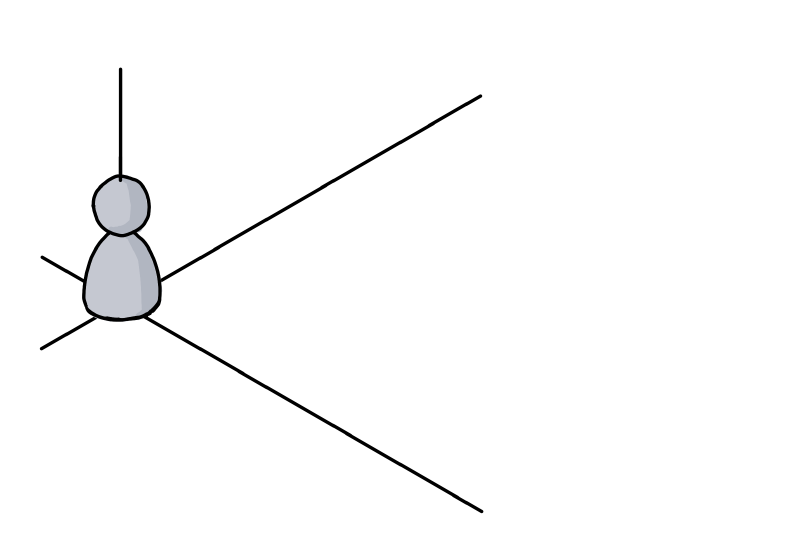

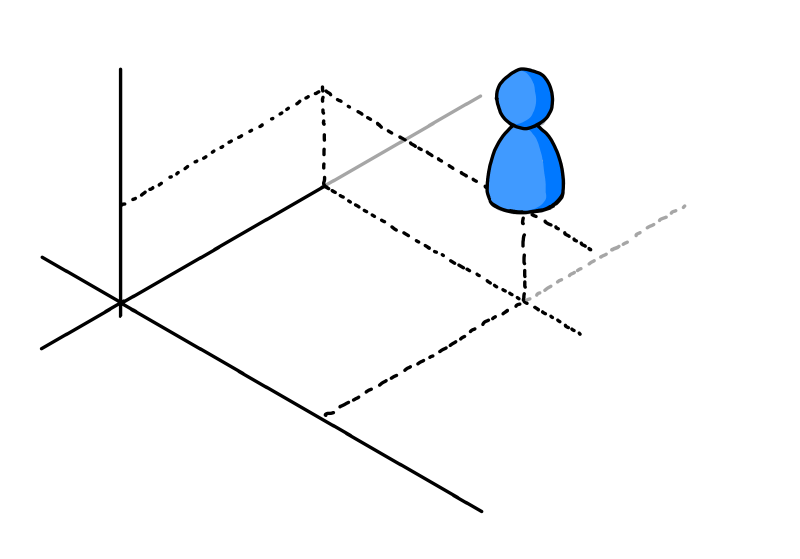

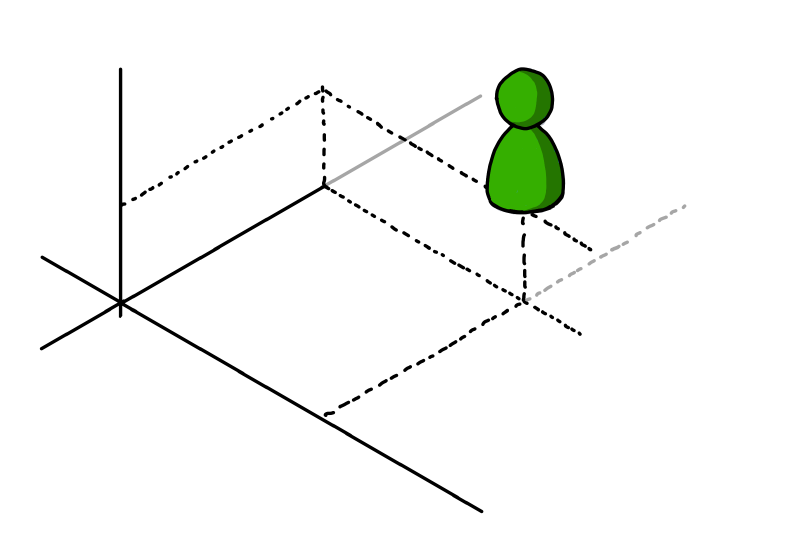

Here you are, living in your frame of reference, depicted as a nondescript coordinate system:

(I learned at university that axes without units are the worst. Have I told you how mental models are likely to be incomplete?)

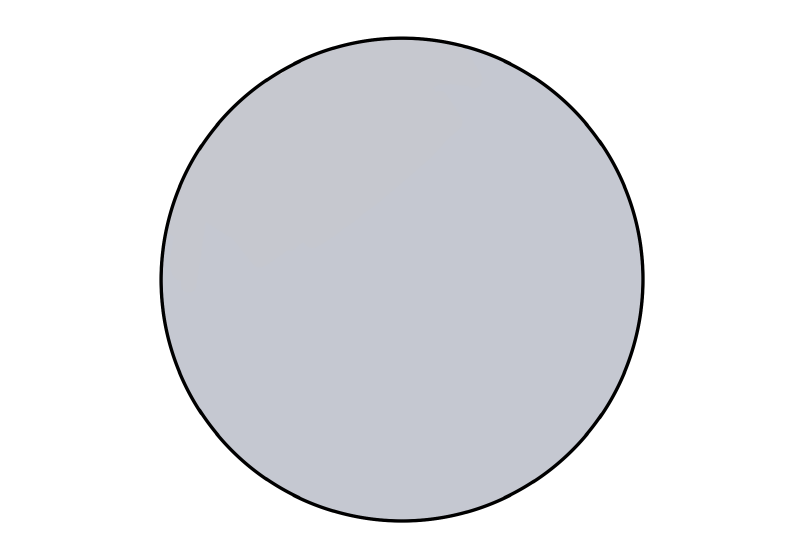

Your knowledge about the application

This is what you, the developer, could theoretically know about the application:

You, the user

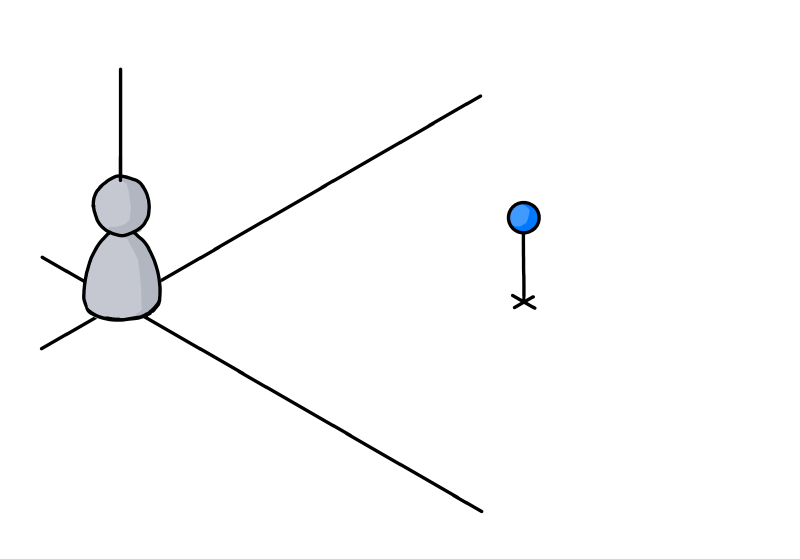

Now you try to imagine “the user”. Not being the developer of the application, the user is in a different situation:

A first step is trying to put yourself in the other person’s shoes:

The user’s knowledge about the application

You assume that not being the developer means that you cannot have knowledge of the inner workings. As a user, you only have the UI, the manual and other public sources at your disposal for understanding the application. This means that the user inevitably must know less about the software:

Next, you try to employ some empathy. You think that it is unrealistic that the user has read and understood all publicly available information. So most likely, the user will know less than theoretically possible:

(Let’s ignore the relative sizes, you get the idea)

But: You are not the user

Always be aware that it is not you, but somebody else in the situation you are looking at:

This person could be highly motivated to use your application. Never underestimate the amount of work people will put into learning something if they feel it enables them to create results that are worth it. Just think how deep some people dig into Adobe After Effects or Microsoft Excel.

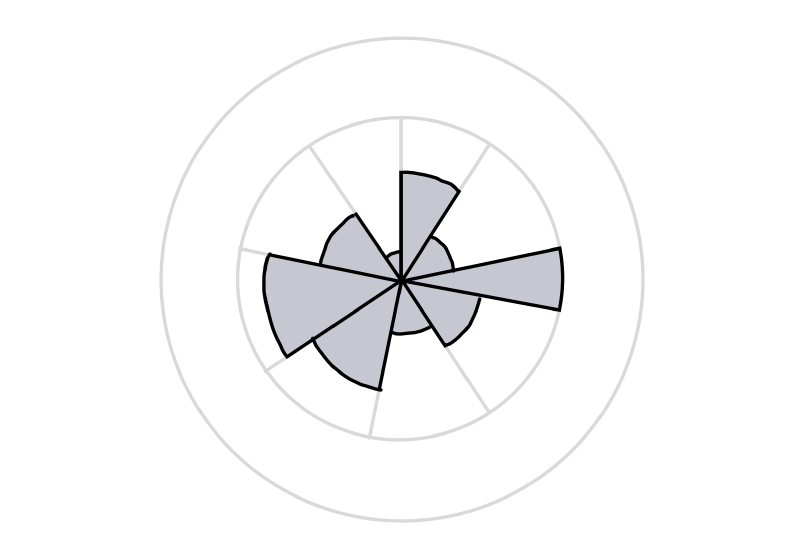

But in general, users most likely acquire just enough knowledge to get the job done. This means that they will know next to nothing about some features. At the same time, they can be very proficient in other features if a task at hand demands it.

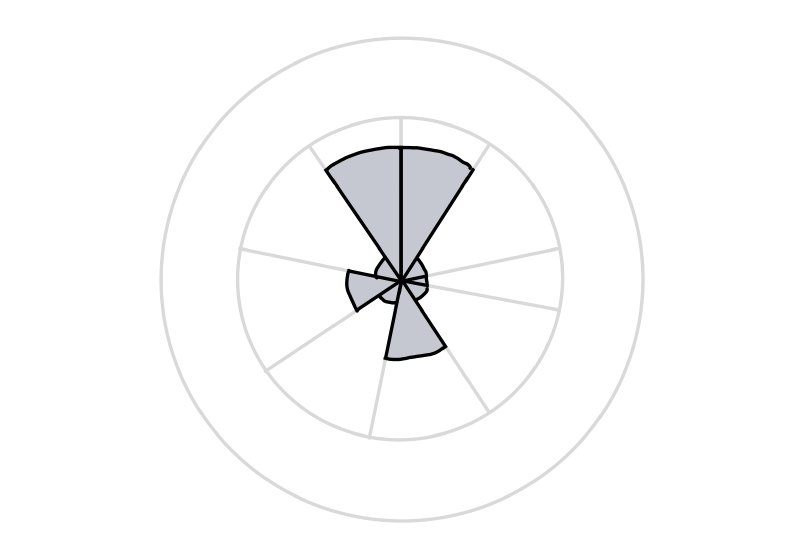

So the graph depicting the user’s knowledge could look like this, with the sectors standing for parts of the application or large features:

Another user

A different person…

…may use the application for other purposes.

He or she may have more or less experience, in different areas:

A good start, but not quite there yet

At this point, we have established that you are not the user and that different users have different needs and thus varying levels of knowledge.

But we are still stuck in our own point of view. For instance, we assess the user’s knowledge in terms of the application’s structure as we see it from a developer’s perspective. Even if we consider scenarios during development, we still tend to think in application parts as well as larger or smaller features.

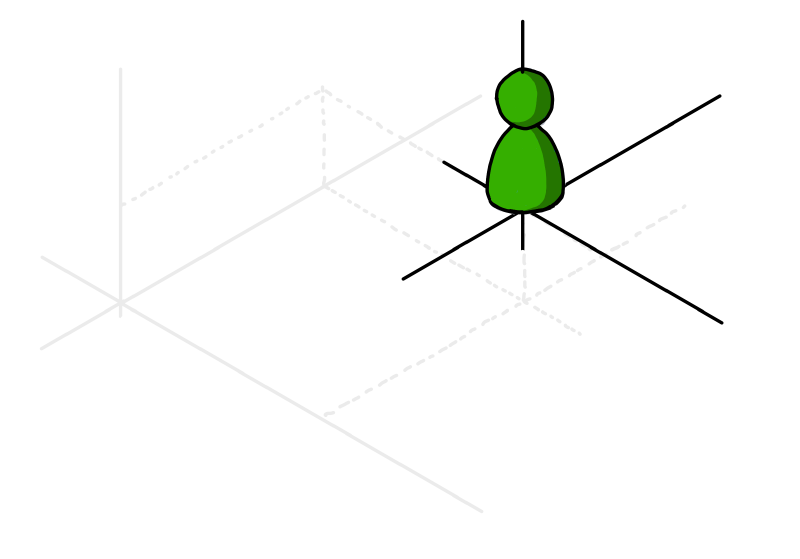

Next: Stop thinking in your frame of reference

The next step is to acknowledge that users have a different frame of reference. As developers, we work on our software for long periods of time, which gives it a high importance in our daily life.

For users, our software is just another application. For them, it is not exactly the center of the universe.

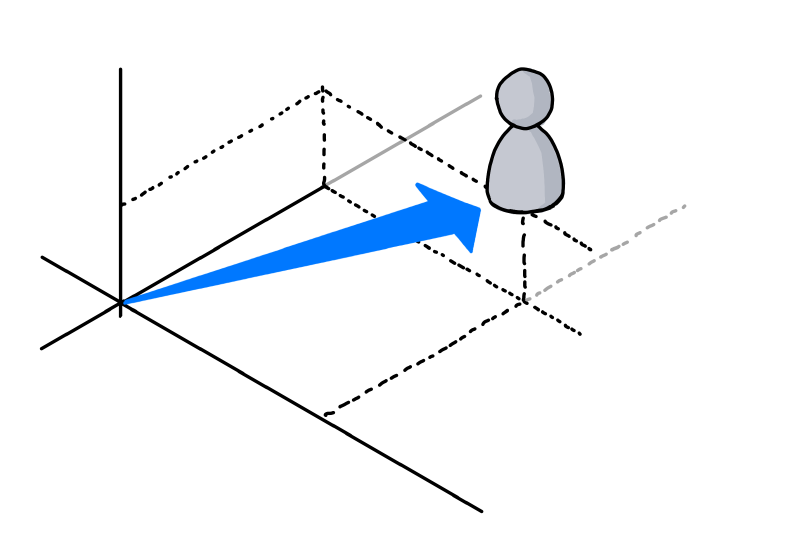

Or, in other words, they live in their own coordinate system:

As an experiment, observe how you yourself use and experience software that you did not develop. Notice how different applications, tools and utilities have different importance to you. And how some software simply does not excite you enough to really learn more than the absolute basics about it.

Now capture that feeling. Be assured, some user out there will feel the same towards your application. A user like this may never become a big fan of your software, but if you design for them, you automatically help everybody else.

If you…

- lead the eye to the right points (by visual design, not a way-too-long onboarding experience)

- write clear UI texts (that even people who do not want to read can understand), and

- design features that are not simply thin UI layers over existing APIs (because users do not think in APIs),

everybody wins.

Using the software vs. getting the job done

Some application features map directly to a user scenario, some scenarios may span multiple features.

But be aware that “real world” scenarios do not necessarily start and finish inside your software. Users may start with ideas, thoughts, purpose, constraints, raw data, etc. outside your application. The work done inside the application may be a first step towards something very different (which means that e.g. a simple CSV export feature can be more important than the fancy result display that you developed). And in between, the scenario may require users to switch to other software.

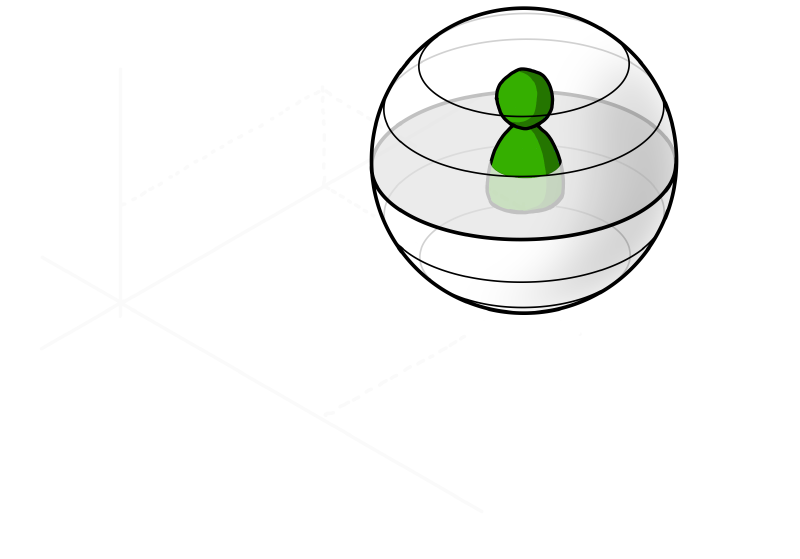

A person trying to achieve a specific goal will likely have a different view of the world. To visualize this, think of a coordinate system that looks very different from yours:

Things to remember

- The way you view your application is influenced by your knowledge of application parts and individual features. Other people may view it differently, based on what they see along their path through the user interface.

- Assume that people do not actually want to use your software; they want to achieve a specific goal. If that aligns with your software, good for everybody.

- Be aware that even though you and your users share the software as a connection point, your world views may be very different.

As a visual reminder, imagine yourself in a Cartesian coordinate system and the user in a spherical coordinate system with a different origin. Neither of you lives in the “correct” or “better” coordinate system; they are just different. But one thing is for sure: the other coordinate system does not have your software at its center…

P.S.

If you are interested in a (non-technical) example of completely different coordinate systems, take a look at my post “Exercise in Thinking: Do Racing Stripes Make a Car Go Faster?”.

-

Exercise in Thinking: Do Racing Stripes Make a Car Go Faster?

The progress from a junior to a senior software developer shows in various ways:

- Coding: Years of working with different languages, frameworks, libraries and architectures help develop a gut feeling for technology.

- Planning: Having experienced a large number of projects or product releases with their milestones, last-minute changes, hotfixes, updates, etc., senior developers usually have a better sense of what to do and what to avoid.

- Analytical thinking: (Good) senior developers tend to ask the right question at the right time, digging deep to find out what they don’t know – and whether what they think they know is true.

For developers, improving their analytical skills is an important part of personal growth. That is why I like to cover non-technical topics both in my conference talks and in my blog articles.

In a previous post called “How to Approach Problems in Development (and Pretty Much Everywhere Else)”, I wrote about (among other things) what Indiana Jones can teach you about problem solving.

The article you are reading now asks whether “racing stripes” make a car go faster. A strange question on a software development blog. This is by design, because strange things tend to be remembered more easily (fun fact: The word “strange” can be translated to German as “merkwürdig”, which in a literal translation back to English could be “worthy to be noticed” or “worthy to be remembered”).

So: Do Racing Stripes Make a Car Go Faster?

When being pressed for a quick answer, most of us would likely go for a “No”.

On second thought, various considerations come to mind:

- Is the racing stripe applied in addition to the existing paint job?

- What about the weight of the paint for the racing stripe?

- What if the stripe is not done in paint, but with adhesive film?

- Or what if the whole paint job is replaced with a design including a racing stripe – and lighter paint is used?

- How much does the weight of the paint influence the car’s performance, anyway?

- …

Many well-intentioned questions, unfortunately focusing too much on details. On the other hand, one important question is missing: What kind of “car” are we talking about here, exactly?

What if the question is actually about toy cars?

- The car in question is now significantly smaller, while the size of the air molecules stays the same. Does aerodynamics play an important role here?

- Does the car have an engine? If not, is the car intended to roll down a hill? Maybe then more weight could be beneficial?

But: Are we not missing something here? If “car” was not what we expected, are our assumptions about “faster” still valid?

- What do we know about the context of the question?

- How exactly is “faster” determined?

Now imagine the following situation: You give two identical toy cars to a young child with an interest in cars. One car has a racing stripe (or a cool design in general), the other does not.

Now you ask the child “which car is faster?”, and if the child answers “the one with the stripes”, then this is the correct answer (for this child).

From our grown-up point of view, the faster car is the one that travels more units of distance per unit time. From the child’s point of view, the car that wins the race in their mind (“wroom, wroom!”) is the faster one. The laws of physics do not necessarily apply here.

Lessons learned

Be careful with your assumptions

Your personal experiences are an important foundation, but they can also mislead you. Be careful when assuming facts that were not explicitly mentioned. You may be subject to a misunderstanding. And even if not, “obvious” questions can still yield helpful information. If you have the chance to ask, use the opportunity. You never know when a question like “just to be sure, we are talking about X that does Y?” is answered “yes, exactly… well, ok, Z should be mentioned, too” – with Z being a vital detail.

Make sure terms are clearly defined

When you talk about X, make sure that you and everybody else agree on what exactly X is. Take the term “combo box”, for example. Even though the word “combo” clearly hints at a combination (of a dropdown list and a text field), some people use the term “combo box” also for simple dropdown boxes.

From a technical point of view, this may be only “half wrong”, because in certain UI technologies, a combo box control can be turned into a dropdown list as an option.

But when speaking to colleagues or customers, this may lead to unnecessary confusion or even wrong expectations.

Be sure to know the context

A car on the street is different from a toy car in the hands of a young child.

Software that is a pleasure to use on your laptop while sitting on the couch can be a pain to use on a smartphone while trying to catch a train.

And for transferring huge amounts of data from location A to location B, a truck full of hard drives can be both a viable solution as well as a terrible idea.

Context matters. A lot.

Fully respect the target audience and their view of the world

This is where empathy comes into play, “the capacity to understand what another person is experiencing from within the other person's frame of reference, i.e., the capacity to place oneself in another's shoes” (quote).

For technical-minded people, empathy may sound like a touchy-feely topic, “about being nice to people”. For me, empathy in software development is about respect towards users and about being a professional.

If you are interested, I have published two articles about empathy with a focus on software development here on this blog (“The Importance of Empathy” and “It’s Good for You, Too!”).

-

How to Approach Problems in Development (and Pretty Much Everywhere Else)

When you plan a feature, write some code, design a user interface, etc., and a non-trivial problem comes your way, you have more or less the following options:

- Attack – invest time and effort, give it all to make it happen, no matter what!

- Sneak around – identify alternative options, or find a way to drastically limit the scope to reduce the amount of work.

- Postpone – do nothing and wait until a later point in time; if the problem hasn’t become irrelevant by then, you know that it’s worth dealing with.

- Don’t do it – sometimes you have to make the hard decision to not do something.

These options seem obvious to me now. But when I look back at my younger self more than twenty years ago, fresh from university, I was all about attacking problems head-on, because problem-solving was fun! Which sometimes led to me spending too much energy in the wrong place, at the wrong time. In this blog post I try to write down some words of wisdom that my younger self might have found useful; maybe they help somebody else out there.

Do a minimum of research

You want to work on the problem, you are eager to write code, but hold on for a second. Don’t dive into it head first. Always imagine a meeting in the future where one of the following questions is asked:

- “What exactly do you know about the problem?”

- “What have other people done so far?”

- “Are there samples/libraries/frameworks? What can we at least learn from them?”

- And of course the killer question: “Why didn’t you think for a second about X?” (with “X” being super-obvious in your context)

You definitely want to know as early as possible about critical obstacles, e.g.

- the only choice for an algorithm for your envisioned approach has exponential complexity,

- you require hardware performance that your target system simply cannot provide,

- you have nowhere near enough pixel space in your user interface to display an envisioned feature, or

- your application only makes sense if your users behave in a completely unrealistic way in the actual usage context.

If you do nothing else, at least gain a basic understanding of what you don’t know. You can deal with a “known unknown” on a meta-level: prioritize it, think about possible risks, talk to other people about it. An “unknown unknown”, on the other hand, can sneak up on you and hit you at the worst possible moment.

Do not confuse means and ends

As developers, we’re problem solvers – that is a good thing. But it sometimes makes us concentrate on the means (i.e. the technical solution to the problem) instead of the ends (what exactly do we want to achieve?). We all know these situations in meetings where no progress is made until somebody broadens the focus.

Be that somebody and ask: In which way does solving that one specific problem contribute to the overall goal? And: Is this the easiest way to achieve the desired result?

Think of the (in)famous “sword fight” scene from Raiders of the Lost Ark: Indiana Jones is facing a bad guy with a sword. Indy, however, does not have a sword. With a narrow focus on the problem of not being able to take part in a (deadly) sword-fighting contest, finding a sword would be the next step. The actual goal, though, is to get rid of the attacker as quickly and with as little risk as possible, which can be achieved by shooting the bad guy with a gun.

Here’s a real-life example that I often present in my UI/UX talks:

My software for the LED advertising system in the local sports arena uses pixel shaders to control the brightness of the displayed images and videos. The UI consists of a slider (0-100%), which I move until the apparent LED brightness looks right for the lighting in the arena (which is not constant due to the necessary warm-up/cool-down phases of the old lamps).

It was very easy to modify the pixel shaders to influence opacity or displacement of pixels, which gave me the idea for smooth transitions between different content sources for the LEDs. My first choice for the UI: the same as for the brightness, a slider. Copy/paste, simple as that. And from a UI point of view, using a slider promised full flexibility in regard to timing and dynamics of the transition. But what was the actual goal? Perform a smooth transition to the next image or video to be shown. For that, flexibility was much less of a concern than consistency and reliability. And that could be achieved with a simple “Next” button that triggered a transition with a fixed duration of 0.5 seconds.
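To make the difference between the slider and the “Next” button concrete, here is a minimal sketch of a fixed-duration crossfade, assuming a simple linear blend per RGB pixel. It is not the actual pixel shader code from the LED software; the names `transition_progress`, `blend_pixel` and `TRANSITION_SECONDS` are hypothetical. The point is that the button only starts a clock, and the elapsed time alone drives the blend factor.

```python
# Hypothetical sketch: a fixed-duration crossfade triggered by a "Next" button.
TRANSITION_SECONDS = 0.5  # fixed duration, as in the example above


def transition_progress(elapsed: float, duration: float = TRANSITION_SECONDS) -> float:
    """Map the time since the "Next" click to a blend factor between 0.0 and 1.0."""
    if duration <= 0:
        return 1.0  # degenerate case: switch immediately
    return min(max(elapsed / duration, 0.0), 1.0)


def blend_pixel(current: tuple[int, int, int],
                upcoming: tuple[int, int, int],
                t: float) -> tuple[int, int, int]:
    """Linearly interpolate each RGB channel from the current to the upcoming source."""
    return tuple(round((1.0 - t) * c + t * u) for c, u in zip(current, upcoming))


# With a slider, the operator would set t by hand; with the button, only the
# elapsed time drives it, so every transition looks exactly the same.
for elapsed in (0.0, 0.25, 0.5):
    print(elapsed, blend_pixel((0, 0, 0), (255, 255, 255), transition_progress(elapsed)))
```

The clamping in `transition_progress` is what buys consistency: late or early frames never overshoot, and the operator has nothing to get wrong.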

Tackle problems as early as necessary, as late as possible

How do you decide which problem to solve first? Of course, if one problem depends on a solution for another, that dictates a specific order. Often enough, though, you have a certain freedom to choose.

If that is the case, ask yourself whether you have to solve a specific problem now. If you postpone working on something that is more or less isolated, there’s always a chance that priorities change. Something that was essential to reaching a specific goal suddenly becomes obsolete because the goal no longer exists.

On the other hand, be careful when delaying work, especially if you don’t know much about possible challenges. If part “A” of a system depends on part “B”, young developers like to work “bottom up”, first building “B” into a nice foundation that will make things easier when it comes to working on “A” (I have been there, too). But you definitely want to avoid a situation where the thing that was supposed to use your framework has known or unknown “unknowns” that turn out to be insurmountable obstacles. So better make sure to have a working proof of concept and work from there.

This is related to the conflict between the (deliberately) very narrow scope of work items, tasks, user stories, etc. on one hand, and the question when the broader architecture of a system should be planned and implemented. Possible approaches range from BDUF (big design up front) in an almost “waterfall” fashion up to doing only the absolute minimum at a given time combined with continuous refactoring whenever needed. The truth is somewhere in between.

The secret is to find the right balance between…

- You ain’t gonna need it (YAGNI): Do not implement things just because you foresee that you might need them. For example: extension points in your software that are never used later (but have to be maintained nevertheless). Or architectures with swappable components on a fine-grained level – if you’re not 100% sure that this is vital for the success of the software.

…and…

- Do it or get bitten in the end (DOGBITE): While you can get away with not doing something for a long time (all with the best intentions of delivering value to the users of your application), postponing some work items can come at a really high cost. For example, if you want to have an undo-redo feature in your finished product. In theory, you could add it with a refactoring at a later time. In practice, good luck getting the budget for stopping all feature work for weeks of refactoring late in development.
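Why is undo-redo such a typical DOGBITE item? One common approach (the command pattern; not necessarily what every application uses) requires every change to the data to go through an object that knows how to reverse itself. The following minimal sketch assumes a plain dictionary as the “document”; `SetValue` and `History` are hypothetical names for illustration.

```python
from dataclasses import dataclass
from typing import Any

_MISSING = object()  # marks "the key did not exist before the change"


@dataclass
class SetValue:
    """A reversible change: remembers the previous value so it can be undone."""
    key: str
    new_value: Any
    old_value: Any = _MISSING

    def apply(self, doc: dict) -> None:
        self.old_value = doc.get(self.key, _MISSING)
        doc[self.key] = self.new_value

    def revert(self, doc: dict) -> None:
        if self.old_value is _MISSING:
            del doc[self.key]
        else:
            doc[self.key] = self.old_value


class History:
    """Undo/redo stacks; only correct if *every* change goes through execute()."""

    def __init__(self, doc: dict) -> None:
        self.doc = doc
        self._undo: list[SetValue] = []
        self._redo: list[SetValue] = []

    def execute(self, command: SetValue) -> None:
        command.apply(self.doc)
        self._undo.append(command)
        self._redo.clear()  # a new change invalidates the redo branch

    def undo(self) -> None:
        if self._undo:
            command = self._undo.pop()
            command.revert(self.doc)
            self._redo.append(command)

    def redo(self) -> None:
        if self._redo:
            command = self._redo.pop()
            command.apply(self.doc)
            self._undo.append(command)


doc = {"title": "Draft"}
history = History(doc)
history.execute(SetValue("title", "Final"))
history.undo()  # doc["title"] is "Draft" again
history.redo()  # doc["title"] is "Final" again
```

The sketch also shows the catch: any line like `doc["title"] = "Final"` elsewhere in the code base silently bypasses the history. Retrofitting undo-redo late means finding and rewriting all of those places.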

In my experience, YAGNI should be the rule, but don’t use it as a “kill-all” argument. Missing a DOGBITE situation is bad and will haunt you for years.

Ask yourself:

- Do you have a rough idea how you would add a feature at a certain point? If yes, that’s good enough, don’t add code as a preparation.

- Are you doing things now that actively prevent adding a feature in the future, or at least make that very expensive? Try to avoid that whenever possible.

- If you consider something DOGBITE, would you be able to defend it if somebody grilled you on it? You had better be, because that’s what will happen at some point.

Heed the universal truths

- Everything is more complicated than it appears at first sight. If it doesn’t, you haven’t asked the right questions yet.

- Truly generic, reusable solutions are hard. First get the job done, then (maybe) think about reuse.

- “Good enough” is perfectly fine most of the time.

- Leaving things out is usually the better choice than adding too much up front. Once something (an API, a feature or some UI) is in your software, removing it will make somebody unhappy.

One more thing…

The Wikipedia article comparing the Amundsen and Scott Expeditions is very interesting. You’ll quickly understand why I mention it here.

-

The Importance of Empathy, pt2: It’s Good for You, Too!

In my recent blog post I described empathy as a valuable skill e.g. for UI design. But empathy is more than just “that UX soft skill thing”, “for making other people’s lives better”.

In this post I’ll give two real-life examples where a lack of empathy significantly reduced the chances of success in a competitive situation – which should give food for thought even to the most selfish person.

Example 1: Programming Contest Entries

In 2004 I entered a competition for Visual Studio add-ins organized by Roy Osherove. Surprise #1: I won. Surprise #2: The blog entry “About the add-in contest results” by Frans Bouma, one of the judges.

He wrote: “As a judge in the add-in contest, I had to test all the add-ins submitted, and judge them on quality and if they deserved any of the big prizes available. I can tell you it was hard. Not because there were so many good entries, but because it was often very hard to even get the submitted code to run, left alone to find any documentation what to do, how to get started.”

Two years later I was in Frans’ shoes when I was a judge on a German contest for “code snippets” (more in the vein of small classes and helper methods than typical Visual Studio snippets e.g. for defining a constructor or a dependency property). I had to wade through more than 120 entries, with many lacking an easy to understand description of “what does it do”, “how do I use it”, etc.

In both contests, not nailing the basics substantially influenced the judgement and thus prevented some of the entries from ranking higher.

What went wrong?

One possible short answer: People were lazy. They did the fun part (writing code), but skipped the non-fun part (writing docs, taking care of setup, etc.). On the other hand, people tend to overcome their laziness if they feel some kind of urgency.

In the case of the contest, it seemed that many of the participants didn’t think that the out-of-the-box experience would be a factor in the review – which hints at a lack of empathy. Because with a bit of empathy, they would have thought about the situation of a contest judge.

Let’s imagine that you are a judge for a programming contest. You unpack the ZIP file containing a contest entry and you get a bunch of files you’ve never seen before.

What would be your first questions? Surely something like:

- Where do I start?

- Is there some kind of README?

- Is there a short introduction, maybe an elevator pitch for this?

- What is actually the problem this thing is trying to solve?

- What are the first steps that I should take?

- Can I simply follow the directions or are there prerequisites that aren't mentioned?

- Is there a simple way to achieve at least something in the software quickly before I dig deeper into it?

Imagine you have to review not only one or two, but dozens and dozens of entries. Wouldn’t you be thankful for any entry that addresses these questions proactively?

Now, after looking at a judge’s perspective: Would you, as the author of a contest entry, assume that a judge instantly sees how awesome your software is, without any explanation beyond a few words? And that none of the other contestants would come up with the idea to make things easier for the judges and thus make a good first impression?

If you can now almost feel the pressure of competition – there’s the urgency required to overcome the laziness and the reluctance to do the things that are not fun.

Example 2: Conference Session Proposals

I’m one of the organizers of the community conference “dotnet Cologne” which is wildly successful and has become the largest conference of its kind in Germany. One of the many things to do for the organizers in the months before the conference is to review a huge number of session proposals entered during the “call for papers”. We have a web application where potential speakers enter their bio and the abstracts for their talks, which makes reviewing and comparing the entries pretty easy.

Unlike the first example, this is not so much a time problem, even for a large number of entries. It’s more a problem of “guesstimating” the scope and the quality of the session if a speaker isn’t one of the rock stars who consistently deliver a certain level of quality. After all, an abstract is just a declaration of intent, not the actual talk. And in many cases, the talk is not even fully fleshed out at the time of the call for papers.

Each year, there are a number of speakers who provide incomplete or very low-quality information and who in consequence are cut first while narrowing down the selection.

What went wrong?

The speakers somehow must have thought that the information they entered was good enough to be selected. Or that, if necessary, more information could be provided on demand at a later time.

Imagine you are in the situation where you have to judge a large number of speakers and their session proposals by reviewing the available information.

- What impression would no or low-quality text in a speaker bio leave on you?

- What would you think about a “more text upon request” in a session abstract?

- Or a “coming later…” that never gets updated?

The larger the number of session proposals, the higher the chances that several speakers propose a session about the same topic (or at least have a large overlap of content). In this case there’s simply no reason to choose the speaker with the lower quality bio and abstract.

Now switch the perspective to that of a speaker: Wouldn’t you wonder what effect providing incomplete information would have? Especially if you compare it to the many speaker bios and session abstracts that you can read (for previous years) on the website of the exact conference that you want to speak at? And wouldn’t you want to avoid the impression that you either don’t have enough time to prepare the talk, or worse, aren’t able to present information in a compact and structured way?

Wrapping up

Empathy is not necessarily about being a nice person. It’s about being professional. Putting yourself in other people’s shoes, even if just for a moment, is always a good idea in general, but even more so in situations where you want something from someone else.

-

The Importance of Empathy

Empathy is (quote) “the capacity to understand what another person is experiencing from within the other person's frame of reference, i.e., the capacity to place oneself in another's shoes”.

Putting yourself in other people’s shoes

If you work on a piece of software, be it as a developer, a designer or a manager, then in your frame of reference, your software is the center of the world. It’s very tempting to think that, in principle, people really would like to use your software, and that UX issues can be solved by improving the existing UI. Which isn’t wrong per se – after all, careful wording of UI texts and meaningful placement and spacing of UI elements do work wonders. On the other hand, it’s easy to fall into the trap of tweaking things endlessly instead of questioning the overall interaction, i.e. “lipstick on a pig”.

In the frame of reference of the user, your software is just a thing that helps him/her to get things done. Users usually don’t actually want to use your software. They want to reach a specific goal and they know, or at least suspect, that your software may be a way (or: one way) to achieve the desired result. That result is important to the user, not the software itself.

If a user has problems with the software, that user will not necessarily think “oh, how much I’d like to use this excellent piece of software, I think I’ll read the tutorial”. No; in the user’s frame of reference, the software is a problem. Whether the user solves the problem (e.g. by putting more effort into learning the software) or avoids it (by using different software or no software at all) depends on the assumed outcome and how valuable it is to the user.

Exercise in empathy: Is it worth learning git?

Let’s take a little detour: The distributed version control system (DVCS) called “git” is a complicated piece of software that, on the one hand, draws criticism and ridicule, but on the other hand is powerful enough to be in widespread use. The situation in today’s software development is pretty simple: If you need to do the things that git does very well and/or if you (intend to) work on a project that is using git, you take the plunge and learn it, period.

I do not use git at the time of this writing (update: I switched to git in 2017). At work, I use the company-provided TFS, so I don’t need git there. And at home I chose Mercurial many years ago, at a time when git wasn’t that popular on Windows yet.

If you are an avid git user, it is a perfect exercise in empathy to think about the reasons why I haven’t switched yet. You have to forget about the reasons why you love git and keep my frame of reference in mind.

I use Mercurial for my (closed source) hobby projects, working both on my desktop at home and my laptop on the road. I spend quite some time on my hobby projects, but of course, this time is limited (other hobbies, time with the wife, etc.). Switching the version control system takes away from that time; time that I could use to write that new feature that I have had in my head for so long.

So in this case, the outcome of switching isn’t valuable enough. Imagine you had written a tool for converting Mercurial repositories to git and were wondering whether a blue button or a green button in your tool’s UI would increase the probability of me switching – that would be the wrong question, caused by the wrong frame of reference.

The ability to leave your current point of view for a moment and to put yourself in other people’s shoes is a highly valuable skill. Of course it does not mean that you have to agree with the other point of view (otherwise police profilers would all have to be serial killers), but it does mean leaving your experiences, opinions and judgements out of the equation.

Empathy in software development

Virtually all software projects have a limited budget. You have to prioritize – you cannot implement all features you’d like, you cannot make everything perfectly usable, you cannot create the perfect user experience.

If you want to design the user interface for your software, you have to decide where to spend your money. Of course you can and should talk to your users, but that’s not always easy and/or economically feasible. And you obviously have to make many decisions without direct feedback. Understanding the point of view of the user and having the empathy to set your own ego aside will help you a lot.

In the UI, you want to make things simple. To achieve that:

- Understand that everything you show on the screen is an obstacle on the way to achieving a specific goal: Even helpful information on the screen has to be understood, and decisions require thinking about the consequences.

- Remember that the cognitive load of a UI is much higher when you open it as a user twice a week vs. when you click through it over and over again as a developer/UX specialist.

- Try to “forget” the background information that you have – and accept that you won’t fully succeed. Still, it helps you to find the most obvious problems e.g. in confusing and/or misleading UI texts.

- (And maybe read the book “Simple and Usable” by Giles Colborne for more on dealing with complexity)

At the same time, you cannot make everything simple. And it doesn’t necessarily have to be.

Take Excel for example. The number of Excel sheets created by non-IT-people that drive business processes across the world is amazing (and a bit frightening, but that’s a different story). Many of these pretty complex sheets were created by people without a formal Excel training course. They somehow learned to use the application by themselves, e.g. by committing the unthinkable act of reading the online help manual (gasp!).

This is a great example of how people do not necessarily give up easily when facing complexity. It’s just a matter of how much they are motivated by the promise of achieving their goals.

It’s not always easy to predict when users will put in extra effort and when they won’t. That’s why it is so important to talk to actual users to find out more about their goals, desires and fears.

For typical UI interactions, experiences with non-IT-people among friends and relatives are – if you observe carefully and look for patterns – a great source for developing empathy with users.

When developing e.g. business software, even a single user interview can provide valuable information about processes, office politics, who should not be able to do what without somebody else checking first, etc. This information then helps you when making empathy-based decisions. Nothing can replace testing in the real world, but a little empathy can make a huge difference and move your user interface from “awful” to “good enough”.